Last time, we studied what artificial intelligence is. Simply put, artificial intelligence can be summed up as a "system for creating human intelligence."

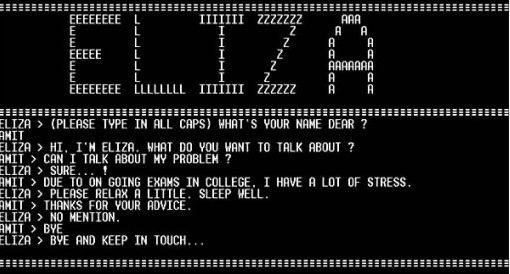

The history of artificial intelligence began in the 1950s, around the time Alan Turing proposed the Turing test. Later, in the mid-1960s, a chatbot system called ELIZA was developed. ELIZA is also regarded as artificial intelligence, but it was not the kind of AI we usually imagine, one that acquires knowledge by learning on its own. It was really just a program that branched on each situation and returned a prepared answer.

XOR problem of Perceptron

= Cannot handle the exclusive OR (XOR) logic circuit

Soon after the history of AI began, its development, which had seemed to be going well, ran into a problem and entered a slump. The problem is known as the XOR problem of the perceptron. If you are just getting started with artificial intelligence, you naturally won't know what a perceptron is, so you can skip the details for now. I'll cover it in a later post; for the moment, think of it as a model that mimics a single tiny neuron in order to implement AI.

Looking at the graph of the XOR gate on the far left, the problem is that the + and - results cannot be separated by a single straight line (that is, linearly). For the OR gate and the AND gate, a single line can separate + from -, but for XOR this is impossible! It looked as if the once-popular history of AI might end right there, but Professor Marvin Minsky said, "We can solve it using multi-layer."
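To make the "single straight line" idea concrete, here is a minimal sketch in Python. It is my own illustration, not something from the original figure: the helper names (`step_unit`, `find_line`) and the search grid are assumptions. It tries many candidate lines `w1*x1 + w2*x2 + b = 0` and checks whether any of them puts the outputs of each gate on the correct side.

```python
from itertools import product

# The four possible inputs of a two-input logic gate.
INPUTS = [(0, 0), (0, 1), (1, 0), (1, 1)]

# Target outputs for each gate, in the same order as INPUTS.
GATES = {
    "AND": [0, 0, 0, 1],
    "OR": [0, 1, 1, 1],
    "XOR": [0, 1, 1, 0],
}


def step_unit(w1, w2, b, x1, x2):
    """A single threshold unit: outputs 1 on one side of the line, 0 on the other."""
    return 1 if w1 * x1 + w2 * x2 + b > 0 else 0


def find_line(targets, grid):
    """Return the first (w1, w2, b) on the grid that reproduces the gate, or None."""
    for w1, w2, b in product(grid, repeat=3):
        outputs = [step_unit(w1, w2, b, x1, x2) for x1, x2 in INPUTS]
        if outputs == targets:
            return (w1, w2, b)
    return None


# Hypothetical search grid: weights and bias from -4.0 to 4.0 in steps of 0.5.
GRID = [v / 2 for v in range(-8, 9)]

results = {name: find_line(targets, GRID) for name, targets in GATES.items()}

for name, line in results.items():
    print(name, "->", line if line is not None else "no separating line found")
```

The search finds a line for AND and for OR but none for XOR. A grid search only checks a finite set of lines, so it illustrates the point rather than proving it. The short proof: XOR needs `b <= 0`, `w1 + b > 0`, and `w2 + b > 0`, and adding the last two gives `w1 + w2 + b > -b >= 0`, which contradicts the requirement `w1 + w2 + b <= 0` for the input (1, 1).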

MLP (Multilayer Perceptron)

By using two layers (blue) instead of a single layer, as shown above, it turned out that the XOR results can be separated with two straight lines, as in the graph on the far right. At the time, however, modeling this concept was possible in theory but too complex to actually implement. (You don't have to understand the picture above. I will study it properly later on, so non-specialists only need to be aware that a model of this shape exists.)
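As a rough sketch of the two-layer idea, the Python snippet below wires up XOR by hand. The weights are ones I picked for illustration (they are not taken from the figure, and nothing is being learned here): one hidden unit draws the OR line, another draws the NAND line, and the output unit fires only when both hidden units fire.

```python
def step(z):
    """Threshold activation: 1 if the weighted sum is positive, else 0."""
    return 1 if z > 0 else 0


def xor_mlp(x1, x2):
    """Two-layer perceptron for XOR with hand-picked (not learned) weights."""
    # Hidden layer: two straight lines in the input plane.
    h_or = step(x1 + x2 - 0.5)      # fires unless both inputs are 0
    h_nand = step(-x1 - x2 + 1.5)   # fires unless both inputs are 1
    # Output layer: AND of the two hidden units.
    return step(h_or + h_nand - 1.5)


for x1, x2 in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(x1, x2, "->", xor_mlp(x1, x2))
```

The two hidden units correspond to the two straight lines: the points between the lines get output 1, and the points outside them get 0.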

In addition, he pointed out a limitation of the MLP model: at the time, there was no known way to train it. This problem was later solved by the backpropagation (error backpropagation) method.

AI stagnation period

1970~1990

That is how the first AI winter came, from 1974 to 1980, followed by the second AI winter from 1987 to 1993. These were periods when AI development stagnated.

In 1997, Deep Blue, a chess computer created by IBM, defeated then-world chess champion Garry Kasparov in a game that had been considered exclusively human territory. However, Deep Blue won by searching through an enormous number of the cases that can arise in a chess game. If you apply the same approach to Go, the number of cases exceeds the number of atoms in the universe, which makes it infeasible.
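To get a feel for the scale, here is a quick back-of-the-envelope calculation in Python. The numbers are my own rough assumptions: each of the 361 points on a 19x19 Go board is treated as empty, black, or white (an upper bound that also counts illegal positions), and 10^80 is a commonly quoted estimate for the number of atoms in the observable universe.

```python
# Upper bound on Go board configurations: 3 states for each of 19 * 19 points.
BOARD_POINTS = 19 * 19
go_upper_bound = 3 ** BOARD_POINTS

# Commonly quoted rough estimate (an assumption, not an exact figure).
atoms_in_universe = 10 ** 80

print("Board points:", BOARD_POINTS)
print("Digits in the Go upper bound:", len(str(go_upper_bound)))
print("Digits in the atom estimate:", len(str(atoms_in_universe)))
print("Go bound is larger:", go_upper_bound > atoms_in_universe)
```

This counts board configurations only, not whole games, so the number of possible game sequences is far larger still.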

Thus, models like Deep Blue that work by examining every situation are hard to apply to other areas. The match shocked the public, but it was not enough to change the paradigm academically.

The advent of the Neural Network

Artificial Neural Network : ANN

Over time, a model called the artificial neural network emerged. Using this model, the XOR problem could be solved with multiple layers, in the way Professor Marvin Minsky had described. (We'll look at neural networks in more detail in future posts.)

In 2009, Google began building a self-driving car using this approach. Time passed, and in 2016 AlphaGo, made by Google subsidiary DeepMind, won at Go, which had been thought of as a truly human domain, shocking the world.

Since then, it has become hard to find an area where AI cannot be applied. It has been shown that AI can also be used in the realm of creative work, which was thought to be a human domain. So far, we have briefly covered the history of artificial intelligence. Next time, let's talk about machine learning and deep learning in earnest.

[Deep Learning] #2 History of Artificial Intelligence / XOR Problem of Perceptron / Artificial Neural Network (ANN)

#Deeplearning #Basic #Artificialintelligence #Perceptron #XORProblem #Artificialneuralnetwork #ANN
